Longer videos with Stable Video Diffusion via YaRN

Background

Text-to-video diffusion models have matured rapidly over the last few months, starting roughly with Gen-1 and Pika and gaining popularity in open source with AnimateDiff and now Stable Video Diffusion (SVD).

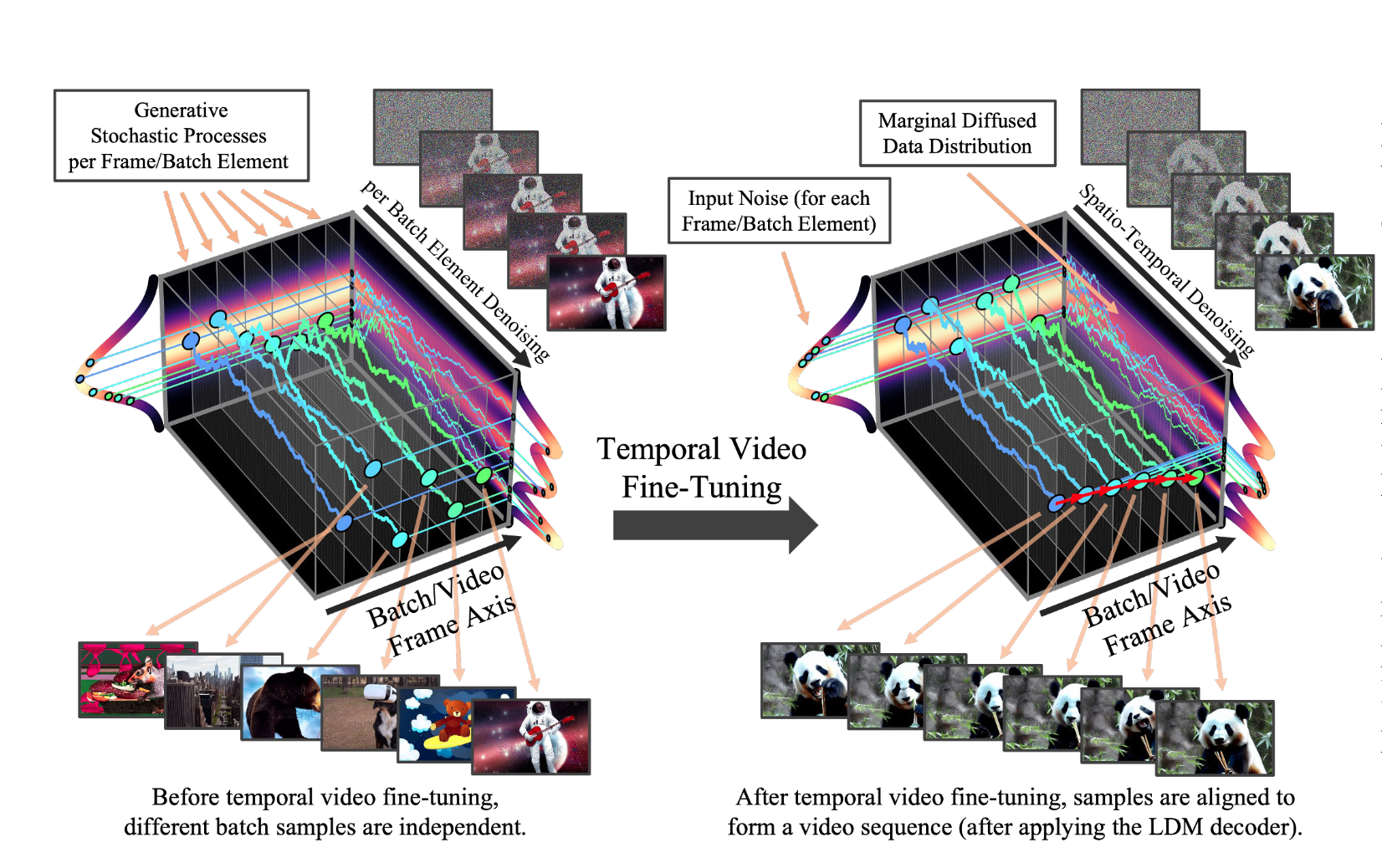

One limitation these models share is that they only generate short clips. In most video diffusion models, including Stable Video Diffusion, this is because the diffusion process is applied across the entire batch of frames. You can think of SVD inference as denoising a batch of latents concurrently, as in text-to-image diffusion models, except that the U-Net attends to all latents at once (technically, through separate temporal and spatial layers). As you can imagine, this is very compute-intensive. It also means the model has only seen videos up to a few seconds long (though at different framerates), because training costs increase with batch size. If you try to generate longer videos, i.e. past 14 frames with SVD or 25 with SVD-XT, coherence and motion suffer.

Diagram from Align your Latents depicting the inference process used in SVD.

Technique

I won't go into all the details of the SVD architecture, but one part that stuck out to me is its use of positional embeddings. In SVD's case, they encode the temporal location of a single latent within the batch. This reminded me of the positional encodings used in large language models, which serve a similar role: they give each token a unique 'position' within the sequence, which the model uses to distinguish the relative location of each token. Just as video models have never 'seen' longer videos, language models are trained with a fixed context length, so they never learn how to handle positions beyond it.

To combat this, a technique called YaRN was developed, building on the idea of position interpolation: scale the positions down so that position 1024 is presented to the model as position 512, position 256 becomes position 128, and so on. Given an LLM with a context window of 512 tokens, this means you can now generate sequences of length 1024 while the model thinks it's only generating a sequence of length 512, which it has seen before. While not as effective as fine-tuning on longer sequences, this works surprisingly well. Subsequent work has fine-tuned LLMs with this technique to further improve performance.
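The scaling described above can be sketched in a few lines. This is a minimal illustration of plain linear position interpolation rather than the full YaRN method, and it uses a standard sinusoidal embedding as a stand-in. The function names, the embedding dimension, and the `max_period` value are assumptions made for the example, not taken from any particular model.

```python
import math

def sinusoidal_embedding(position, dim=8, max_period=10000.0):
    """Sinusoidal embedding of a (possibly fractional) position."""
    half = dim // 2
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    return ([math.cos(position * f) for f in freqs]
            + [math.sin(position * f) for f in freqs])

def interpolated_positions(target_len, trained_len):
    """Scale indices 0..target_len-1 so they stay inside the trained range."""
    scale = min(1.0, trained_len / target_len)  # never stretch short sequences
    return [i * scale for i in range(target_len)]

# A model trained on 512 positions, asked to handle 1024.
positions = interpolated_positions(target_len=1024, trained_len=512)
embeddings = [sinusoidal_embedding(p) for p in positions]

print(positions[256])   # index 256 is presented to the model as position 128.0
print(positions[-1])    # the last index stays below the trained maximum of 512
```

The trade-off is that neighbouring positions are now only half a step apart, so the model has to distinguish positions at a finer resolution than it was trained on.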

So, I took the same technique and applied it to SVD. By scaling the positional embeddings by a factor of 1/2, I was able to generate videos twice as long as the model had ever seen before. This was a simple change to the model and required no additional training. SVD was now able to generate coherent videos up to 80 frames in length, where it had originally been trained for only 25 frames. Going further, I added some techniques from the FreeNoise paper to cut down on inference time by windowing temporal self-attention.
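To give a rough idea of what windowing temporal self-attention means, here is a small sketch. It is not the node's actual implementation and may differ from FreeNoise in its details: the window size, the stride, and the `attend` callback (a stand-in that returns one scalar per frame instead of real attention over tensors) are all assumptions made for illustration.

```python
def sliding_windows(num_frames, window, stride):
    """Return (start, end) frame ranges that together cover every frame."""
    if num_frames <= window:
        return [(0, num_frames)]
    starts = list(range(0, num_frames - window + 1, stride))
    if starts[-1] != num_frames - window:
        starts.append(num_frames - window)  # make sure the tail is covered
    return [(s, s + window) for s in starts]

def fuse_windows(num_frames, windows, attend):
    """Run `attend` on each window and average outputs where windows overlap."""
    totals = [0.0] * num_frames
    counts = [0] * num_frames
    for start, end in windows:
        frames = list(range(start, end))
        outputs = attend(frames)  # one value per frame in this window
        for frame, value in zip(frames, outputs):
            totals[frame] += value
            counts[frame] += 1
    return [t / c for t, c in zip(totals, counts)]

def mean_attend(frames):
    """Toy stand-in for attention: every frame receives the window's mean index."""
    mean = sum(frames) / len(frames)
    return [mean] * len(frames)

windows = sliding_windows(num_frames=48, window=14, stride=7)
fused = fuse_windows(48, windows, mean_attend)
print(windows)
```

Each window only attends over 14 frames instead of all 48, which is where the savings come from, and averaging the overlapping regions keeps neighbouring windows from drifting apart.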

Results

48 frames with base SVD (trained for 14 frames) without position interpolation

48 frames with base SVD with position interpolation

To be honest, it works OK. I was expecting massive improvements, but the model is still very sensitive to the scaling value and other parameters, such as the motion_bucket_id and CFG scale. It works best for pans, zooms, and other smooth camera movements. For more complex scenes, this technique still struggles. I think this is a good first step, but there's still a lot of work to be done to get this to work well in practice. That being said, for a technique that required no additional training, it's nice to see it work at all.

You can try this with the Comfy node I've published here. Let me know if this is helpful, and if you're interested in improving on it, contact me via the links below!